Even as Eugene Garfield proposed the impact factor more than half a century ago, he had reservations. “I expected it to be used constructively while recognizing that in the wrong hands it might be abused,” he said in a presentation he gave at the International Congress on Peer Review and Biomedical Publication in September 2005.

Indeed, while Garfield had intended the measure to help scientists search for bibliographic references, impact factor (IF) was quickly adopted to assess the influence of particular journals and, not long after, of individual scientists. It has since become a divisive term in the scientific community, with young researchers still striving to demonstrate their worth within the confines of an antiquated publishing system. In the meantime, waves of criticism of the impact factor have arisen, citing the difficulty of reproducing it consistently and the ease with which its value can be manipulated (e.g., by encouraging self-citations). “In 1955, it...

A journal’s IF may be defined as the average number of citations accrued in a given year by the research papers, reviews, and other citable articles that the journal published during the two preceding years. For example, if in 2015 a given journal received an average of 10 citations for each article published in 2013 and 2014, its impact factor in 2015 is 10. However, the calculation is flawed in that it gives disproportionate weight to a small number of highly cited papers. According to a Nature editorial published last July, IF is a “crude and misleading” statistic that is bound to bring about biased assessment of scientific journals and of the performance of the research scientists who publish in them.
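The averaging described above, and its sensitivity to a single outlier, can be illustrated with a minimal sketch. The function name and the citation counts are hypothetical, chosen only for illustration; they are not data from any real journal.

```python
def impact_factor(citations_per_article):
    """Mean citations received this year by articles from the two preceding years.

    `citations_per_article` is a hypothetical input: one citation count per
    citable article published in the two-year window.
    """
    if not citations_per_article:
        raise ValueError("need at least one citable article")
    return sum(citations_per_article) / len(citations_per_article)


# Hypothetical journal: nine articles cited twice each...
typical = [2] * 9
# ...plus one article cited 182 times.
skewed = typical + [182]

print(impact_factor(typical))  # 2.0
print(impact_factor(skewed))   # 20.0
```

In this made-up case, one heavily cited article raises the average tenfold even though nine of the ten articles are cited only twice.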

Accordingly, the Nature Publishing Group has updated its metric list to include a two-year median number of citations, instead of the average number used by IF. This limits the influence of a few highly cited papers. However, the two-year time window is still inappropriate for many disciplines with large temporal variation in citation numbers.
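To see why a median is less sensitive than an average, consider the following sketch. It uses Python's standard `statistics` module; the citation counts are hypothetical and do not come from any real journal.

```python
from statistics import mean, median

# Hypothetical two-year citation counts: nine modestly cited articles
# and one very highly cited article.
citations = [2] * 9 + [182]

average_citations = mean(citations)    # pulled upward by the single outlier
median_citations = median(citations)   # reflects the typical article

print("mean:", average_citations)
print("median:", median_citations)
```

With these illustrative numbers the mean is ten times the median, which is the distortion that a median-based metric is meant to limit.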

To address this, another metric, known as the h5 index, is gaining popularity. Proposed by Google Scholar Metrics in 2012, the h5 of a given journal is the largest number h such that h articles published by the journal in the preceding five years have each been cited at least h times. For example, if a given journal has 10 papers from the past five years that have each been cited at least 10 times, but not 11 papers that have each been cited at least 11 times, its h5 index is 10. But h5 is also an imperfect solution, as it is still influenced by the volume of papers published and the scope of journals. In other words, high-volume journals will naturally garner higher h5 values, regardless of overall quality.
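The h5 definition can be made concrete with a short sketch. The function name is hypothetical, and the input is assumed to be the citation counts of articles a journal published within the five-year window; the example journals are invented for illustration.

```python
def h_index(citations):
    """Largest h such that h articles have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank      # the top `rank` articles all have >= rank citations
        else:
            break         # counts only fall from here, so h cannot grow further
    return h


# The example from the text: 10 papers, each cited at least 10 times.
print(h_index([10] * 10))    # 10

# Hypothetical small journal: 20 papers, each cited 50 times.
print(h_index([50] * 20))    # 20

# Hypothetical large journal: 1,000 papers, each cited only 30 times.
print(h_index([30] * 1000))  # 30
```

In the last two invented cases, the larger journal earns the higher h5 even though each of its papers is cited less often, which is the volume effect noted above.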

In 2015, the National Institutes of Health (NIH) introduced yet another metric, termed the relative citation ratio (RCR), and has since begun using the RCR in the agency’s funding decisions. The methodology, which is transparent and freely available, compares the citations an article receives against the average citation rate of the journals in its field. However, some experts find the RCR “too complicated and too restrictive,” bibliometric specialist Lutz Bornmann of Max Planck Society in Munich told Nature last November, adding that he has no plans to adopt it.

Clearly, a new, more-balanced metric is still needed to accurately capture a given journal’s academic level in terms of high-quality and high-impact articles.

Rethinking the metric

The ideal metric should represent the citation number of relatively high-quality papers published by that journal. In this vein, we propose an Indicator (Id) that is calculated by multiplying the proportion of high-quality papers (out of the total number of published papers) by the total number of citations a journal receives:

$$\mathrm{Id} = \frac{N_h}{N_t} \times C_t$$

where Id is the Indicator, $N_h$ is the number of high-quality papers, $N_t$ is the total number of papers, and $C_t$ is the total number of citations the papers published in a given journal receive. This formula can be written as:

$$\mathrm{Id} = \frac{C_t}{N_t} \times N_h$$

Here, $C_t / N_t$ is similar to IF by virtue of its method of calculation, while $N_h$ can be represented by the h5 value. Thus, the Id formula can again be rewritten as:

$$\mathrm{Id} = \mathrm{IF} \times \frac{h5}{5}$$

where dividing h5 by five adjusts for the five-year time restriction of the h5 metric. In this form, it’s clear that Id can be calculated through simple multiplication of the two currently available metrics, IF and h5. We have therefore termed this new indicator the IF-h5.
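As a minimal sketch of the arithmetic, the function below multiplies IF by h5 and divides by five, as described above. The function name and the two example journals are hypothetical; the values are invented for illustration and are not taken from Table 1.

```python
def if_h5(impact_factor, h5):
    """IF-h5 indicator: IF multiplied by h5, with h5 divided by five
    to adjust for its five-year window."""
    return impact_factor * h5 / 5


# Hypothetical journal A: very high IF, modest h5 (few papers published).
journal_a = if_h5(impact_factor=100, h5=50)

# Hypothetical journal B: low IF, high h5 (very many papers published).
journal_b = if_h5(impact_factor=3, h5=150)

print(journal_a)  # 1000.0
print(journal_b)  # 90.0
```

Because the indicator is a product, a journal needs both a reasonable IF and a reasonable h5 to score well; a high value on only one of the two is pulled down by the other.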

Performance of the IF-h5

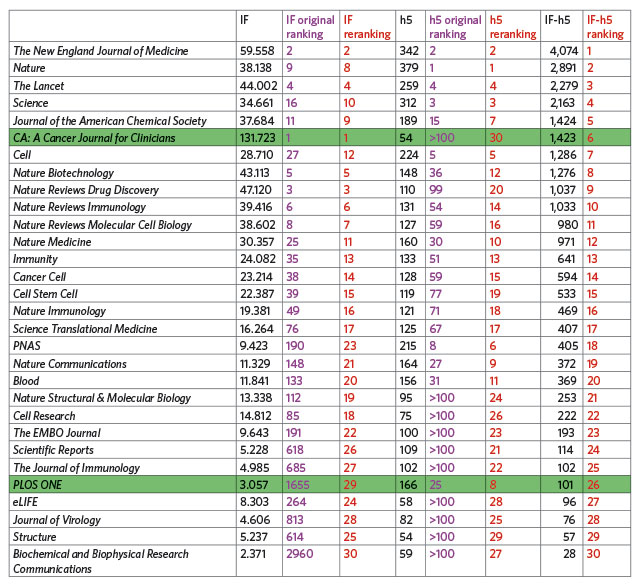

To evaluate the performance of our proposed indicator, we obtained both IF and h5 values in 2015 and calculated the corresponding IF-h5 values of 30 selected journals. These journals have IFs ranging from less than 3 to more than 100, and they represent publications covering both single and multiple disciplines, as well as different publishers.

As shown in Table 1, the pattern of IF-h5 ranking is distinctly different from that of either IF or h5, which themselves are dramatically different from each other. As an example, we can compare two highlighted journals in the table: CA: A Cancer Journal for Clinicians (CA Cancer J Clin) and PLOS ONE. CA Cancer J Clin ranks first in IF (both IF original ranking, based on 11,991 journals, and IF reranking, based only on the 30 selected journals), but unexpectedly it ranks below 100th in the h5 original ranking (based on the top 100 journals in the h5 index) and last in the h5 reranking (based only on the 30 selected journals). In contrast, PLOS ONE ranks 1,655th in IF among all journals and 29th in the IF reranking, but its h5 original ranking and reranking jump to 25 and 8, respectively.

The discrepancy between IF and h5 rankings could be attributed to the huge difference in the number of papers published in these two journals. From 2010 to 2014, CA Cancer J Clin published fewer than 200 papers, while PLOS ONE published more than 100,000 papers, according to Web of Science. However, when ranked by IF-h5, CA Cancer J Clin is 6th among the 30 selected journals, while PLOS ONE is 26th. These new rankings seem to find a mathematical balance between their respective IF and h5 rankings.

Our proposed IF-h5 measure thus offsets the deficiencies of both IF and h5. First, it eliminates the otherwise crippling effect of a few highly cited papers, which can artificially inflate a journal’s IF. Second, unlike h5, it avoids the biasing effect of the total number of papers published and instead emphasizes publication quality. Furthermore, IF-h5 can be easily calculated through simple multiplication of IF and h5, two available metrics that can be obtained from Journal Citation Reports and Google Scholar, respectively, without resorting to additional statistics.

Based on these analyses, we propose IF-h5 as a new, rational metric for the academic evaluation of journals. While IF-h5 must undergo further testing and verification before it can be implemented as a bona fide evaluation method, we argue that it is poised to lay the foundation for a more diverse and balanced evaluation system.

Comparison of IF, h5, and IF-h5 for 30 selected journals

IF original rankings are based on 11,991 journals; IF rerankings are based on the 30 selected journals listed in this table. Similarly, h5 original rankings are based on the top 100 journals in the h5 index, while h5 rerankings are based on the 30 selected journals only. IF-h5 ranking is based on the 30 selected journals listed in this table.

Yan Wang is an editor at the Shanghai Information Center for Life Sciences and the Institute of Biochemistry and Cell Biology, Shanghai Institutes for Biological Sciences, Chinese Academy of Sciences. Haoyang Li is a PhD candidate and Shibo Jiang is a professor of microbiology at Shanghai Medical College of Fudan University.
