In 2000, the type 2 diabetes drug Rezulin was withdrawn from the market after several dozen patients required a liver transplant or died of liver failure. While the vast majority of drugs that make it to market are safe, such high-profile failures show that the drug development process is far from flawless.

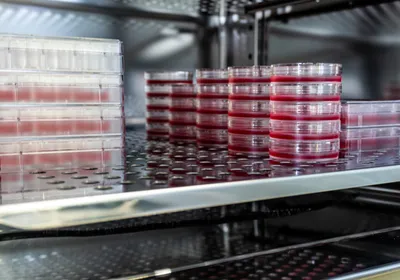

The good news is that the number of drug targets has grown, as have both the supply of and the demand for high-throughput assays. However, this revolution in biomedical science has not produced a pipeline brimming with new drugs. Chemical entities that show biological activity against a given target can become lead compounds, but those compounds still need to be turned into viable drugs.

The typical compound entering a Phase I clinical trial has been through roughly a decade of rigorous preclinical testing, yet it still has only an 8% chance of reaching the market.[1] Some of this high attrition ...