Kenneth J. Oh is a global marketing manager for Bio-Rad.

In the current age of rapid scientific advancement, the integrity of published data has never been more critical, or more vulnerable. As technologies evolve and the number of published papers climbs, so too does the risk of scientific misconduct, whether through deliberate fraud or accidental oversight. A key issue lies in the use of digital imaging and, in particular, the manipulation of images of western blots, a fundamental research tool that has become a focal point for integrity investigations.

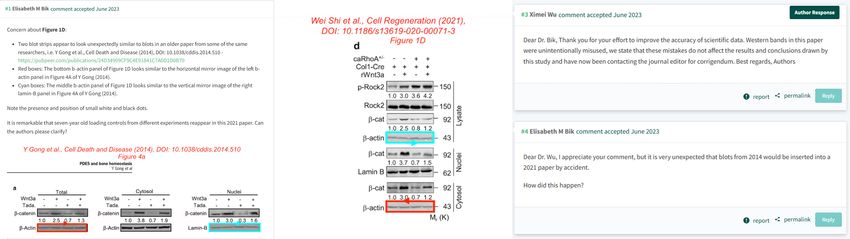

Few figures are as central to this movement for accountability as Elisabeth Bik, a microbiologist and scientific integrity consultant whose meticulous forensic work has flagged duplicated or manipulated images across a range of published papers. These findings have contributed to numerous retractions and corrections,1 and they have also triggered high-profile conversations around transparency and scientific integrity.

Bik has a trained eye for spotting irregularities in western blot images.

One of the most prominent cases hit the headlines in 2023, when Marc Tessier-Lavigne, then-president of Stanford University and a renowned neuroscientist, stepped down following an eight-month investigation into image manipulation allegations.* Many of the irregularities involved reused western blot images presented as distinct experiments or duplications spread across multiple studies.2-5 Early on, Bik was one of the experts to raise concerns about these papers on PubPeer, an online platform that facilitates post-publication peer review. Her findings then helped reignite calls for greater public scrutiny not only of individual authors but also of the entire scientific publication system.

In this article, Bik shares insights into her forensic work, the tools she uses, and the challenges posed by both human error and emerging technologies such as AI.

The Eyes Have It: Manual Inspection in a Digital Age

Bik’s early work was painstaking, performed on evenings and weekends: “When I started doing this work back in 2014, I had no choice but to rely solely on my eyes as there were no available tools to my knowledge at the time.” She continued, “After work, I’d go home, sit behind my computer, visually scanning through over 20,000 papers looking for duplications.” Findings from her first study were published in 2016, demonstrating 782 instances of image duplication, including 196 published papers containing manipulated duplicated figures.6

Today, she works with image analysis tools such as Imagetwin and Proofig AI, which are designed to detect duplicated images in scientific papers. However, she warns against over-reliance on automation: “Although such tools allow you to simply drag and drop a PDF and scan for duplications, they might still produce false positives or false negatives, especially when images have very low contrast,” she noted. “Even though I now use software to support my efforts, I also visually inspect everything as well since a trained eye remains indispensable.”
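
To give a rough sense of how automated duplication screening can work in principle, the following is a minimal sketch of one common technique, perceptual "average" hashing. It is not a description of how Imagetwin or Proofig AI operate; the function names, the 8 x 8 hash grid, the distance threshold, and the synthetic `make_blot` test image are all assumptions chosen for illustration.

```python
from typing import List, Sequence

Image = Sequence[Sequence[float]]  # grayscale pixel values, one inner sequence per row


def average_hash(image: Image, size: int = 8) -> List[int]:
    """Shrink the image to a size x size grid of block means, then
    mark each block 1 if it is brighter than the mean block, else 0."""
    rows, cols = len(image), len(image[0])
    if rows < size or cols < size:
        raise ValueError("image is smaller than the hash grid")
    blocks = []
    for i in range(size):
        r0, r1 = i * rows // size, (i + 1) * rows // size
        for j in range(size):
            c0, c1 = j * cols // size, (j + 1) * cols // size
            total = sum(image[r][c] for r in range(r0, r1) for c in range(c0, c1))
            blocks.append(total / ((r1 - r0) * (c1 - c0)))
    mean = sum(blocks) / len(blocks)
    return [1 if block > mean else 0 for block in blocks]


def hamming_distance(a: Sequence[int], b: Sequence[int]) -> int:
    """Count the positions at which two hashes differ."""
    return sum(x != y for x, y in zip(a, b))


def looks_duplicated(img_a: Image, img_b: Image, max_distance: int = 5) -> bool:
    """Flag a pair whose hashes differ in at most max_distance bits (assumed threshold)."""
    return hamming_distance(average_hash(img_a), average_hash(img_b)) <= max_distance


def make_blot(band_start: int, offset: int = 0) -> List[List[int]]:
    """Hypothetical 16 x 16 'blot': light background with one dark 4-row band."""
    return [
        [(50 if band_start <= r < band_start + 4 else 200) + offset for _ in range(16)]
        for r in range(16)
    ]


original = make_blot(4)
brightened_copy = make_blot(4, offset=10)  # same band, uniformly brighter
different_blot = make_blot(10)             # band in a different position
```

In this toy setup, `looks_duplicated(original, brightened_copy)` is true even though the copy was brightened, while `looks_duplicated(original, different_blot)` is false. Two featureless panels of different brightness would also hash identically under this scheme, which illustrates one way a low-contrast image could produce a false positive and why a visual check remains useful.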

AI Poses Both a Solution and a Threat

The role of AI in image forensics is double-edged. While some AI tools can aid in pattern recognition and duplication detection, others, particularly generative models, pose new risks. “If anyone alters or duplicates images, they will likely leave traces for me to find. But if you train an AI tool using the right material, it might soon be possible to produce very realistic images, including western blots, immunohistochemistry slides, or even photos of cells, mice, plants and so on, that our current tools might miss entirely,” she said. Bik points to what she calls the tadpole paper mill, where researchers appeared to reuse tadpole-looking blot bands, all pasted onto identical western blot backgrounds. “It looked like they were using possibly a precursor of generative AI, or at least an image bank,” she noted. “Back in 2020, science sleuths caught over 400 papers because of that mistake,7 but today, AI-generated images are almost perfect, which is very worrisome as it could make those images much harder to detect.”

The Case for Raw Data and Institutional Oversight

Bik supports the inclusion of raw image data at the time of manuscript submission to help ensure data integrity. “It makes it harder to manipulate data if the raw images are already available,” she commented. Similarly, requiring raw data early in the process can act as a deterrent: “If a problem is discovered later, you can go back and look at what was originally submitted.” Bik pointed out that more journals and institutions are now proactively screening incoming manuscripts, which is reducing incidents of data manipulation. She is therefore advocating for more institutions to take a stronger stance on data retention and oversight, including offering digital notebooks and secure data storage.

Beyond raw image access, Bik supports more stringent peer-review protocols, especially for imaging experiments. She highlighted that, in many instances, problems such as overlapping images or duplicated panels arise from author negligence. “While some cases show clear signs of photoshopping, many seem to be a result of carelessness,” she said. “It’s frustrating because even though the errors are often corrected quickly, a pattern emerges. In some labs, up to one-third of the papers have visible duplications. Reviewers tend to skim over complex, multi-panel figures, which means these duplications may easily go unnoticed.”

A screenshot from PubPeer showcases an example of Elisabeth Bik’s concerns regarding western blot image duplication in published papers.

PubPeer

Safeguarding Trust Through Data Integrity

Maintaining data integrity is essential to upholding trust in the scientific process. It ensures that published results accurately reflect experimental reality, preventing misinformation that could mislead research, waste resources, and damage public confidence. In techniques like western blotting and gel electrophoresis, the quality of scientific data depends on accurate, secure handling from acquisition to analysis. Instrument software plays a vital role by integrating audit trails, encrypted storage, and user access controls to ensure data integrity and reliability. These features are especially important in regulated environments, where compliance with standards for electronic records and signatures, such as FDA 21 CFR Part 11 and EU GMP Annex 11, is required.
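
As a rough illustration of what makes an audit trail tamper-evident, the sketch below chains each log entry to the previous one with a SHA-256 hash, so that editing any earlier entry invalidates the chain. This is a simplified example under stated assumptions, not a description of any vendor's instrument software, and it does not by itself satisfy 21 CFR Part 11 or Annex 11. The field names and the helpers `append_entry` and `verify_log` are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry (assumption)


def _digest(fields: Dict[str, str]) -> str:
    """Hash an entry's fields in a stable key order."""
    payload = json.dumps(fields, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log: List[Dict[str, str]], user: str, action: str, timestamp: str) -> None:
    """Append an entry whose hash covers its content and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    fields = {"user": user, "action": action, "timestamp": timestamp, "prev_hash": prev_hash}
    log.append({**fields, "hash": _digest(fields)})


def verify_log(log: List[Dict[str, str]]) -> bool:
    """Recompute every hash and check that each entry links to the one before it."""
    prev_hash = GENESIS
    for entry in log:
        fields = {key: value for key, value in entry.items() if key != "hash"}
        if entry["prev_hash"] != prev_hash or _digest(fields) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical usage: record an acquisition and a later adjustment.
audit_log: List[Dict[str, str]] = []
append_entry(audit_log, "analyst_1", "acquired blot image", "2025-01-15T09:00:00Z")
append_entry(audit_log, "analyst_1", "adjusted contrast", "2025-01-15T09:05:00Z")
```

Here `verify_log(audit_log)` returns true for the untouched log; if the first entry's `action` were rewritten after the fact, its stored hash would no longer match and verification would fail. A production system would also need to protect the log itself, for example through access controls and secure storage.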

As imaging technologies and analytical techniques continue to advance, it is essential that the underlying systems and workflows evolve in tandem. Adopting a sector-wide approach to maintaining data integrity by combining human oversight, technical safeguards, and regulatory compliance may ultimately be the key to ensuring the credibility and reproducibility of science.

* Editor's note: The outcome of a legal investigation found that Marc Tessier-Lavigne did not personally engage in research misconduct, did not have actual knowledge of any manipulation of research data, and was not in a position where a reasonable scientist would be expected to have detected any such misconduct. (Updated October 9, 2025)

1. Shi W, et al. RhoA/Rock activation represents a new mechanism for inactivating Wnt/β-catenin signaling in the aging-associated bone loss [published correction appears in Cell Regen. 2024 Feb 10;13(1):3]. Cell Regen. 2021;10(1):8.

2. Stein E, et al. Binding of DCC by netrin-1 to mediate axon guidance independent of adenosine A2B receptor activation [retracted]. Science. 2001;291(5510):1976-1982.

3. Stein E, Tessier-Lavigne M. Hierarchical organization of guidance receptors: silencing of netrin attraction by slit through a Robo/DCC receptor complex [retracted]. Science. 2001;291(5510):1928-1938.

4. Nikolaev A, et al. APP binds DR6 to trigger axon pruning and neuron death via distinct caspases [retracted]. Nature. 2009;457(7232):981-989.

5. Lu X, et al. The netrin receptor UNC5B mediates guidance events controlling morphogenesis of the vascular system. Nature. 2004;432(7014):179-186.

6. Bik EM, et al. The prevalence of inappropriate image duplication in biomedical research publications. mBio. 2016;7(3):e00809-16.

7. Byrne JA, Christopher J. Digital magic, or the dark arts of the 21st century-how can journals and peer reviewers detect manuscripts and publications from paper mills? FEBS Lett. 2020;594(4):583-589.