For nearly two decades, many scientists have relied on the h-index—which measures the productivity and citation impact of a researcher’s publications—as a yardstick of academic success.1 However, several researchers criticize the metric for considering all of an individual’s papers equally, irrespective of whether they are a first, middle, or corresponding author.
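For reference, Hirsch defined the h-index as the largest number h such that h of a researcher's papers have each been cited at least h times. The short Python sketch below illustrates that definition with made-up citation counts. It looks only at citation counts, so a paper counts the same whether the researcher was its first, middle, or corresponding author, which is the property critics object to.

```python
def h_index(citations: list[int]) -> int:
    """Return the largest h such that at least h papers have >= h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


if __name__ == "__main__":
    # Hypothetical citation counts for one researcher's five papers.
    print(h_index([10, 8, 5, 4, 3]))  # prints 4
```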

Gaurav Sharma, a microbial genomics and data analytics researcher at the Indian Institute of Technology Hyderabad, and his team sought to change this. They developed GScholarLens, a data analytics tool available as a browser extension that weights citations by authorship order and role. Sharma and his team described the framework behind the tool in a preprint, which has not yet been peer reviewed.2 The tool has drawn mixed reactions from researchers: while some noted how easy it is to use and appreciated the reasoning behind it, others questioned whether academics need yet another metric to measure scientific impact.

GScholarLens Assigns a Normalized Scholar H-Index

To calculate a normalized scholar h-index, or Sh-index, Sharma and his team designed GScholarLens to read the authorship order of each paper listed on a researcher's Google Scholar page. The tool assigns the maximum weight of 100 percent to the corresponding author, meaning that all of the citations a paper receives count toward that author's Sh-index. First authors receive a weighting of 90 percent.

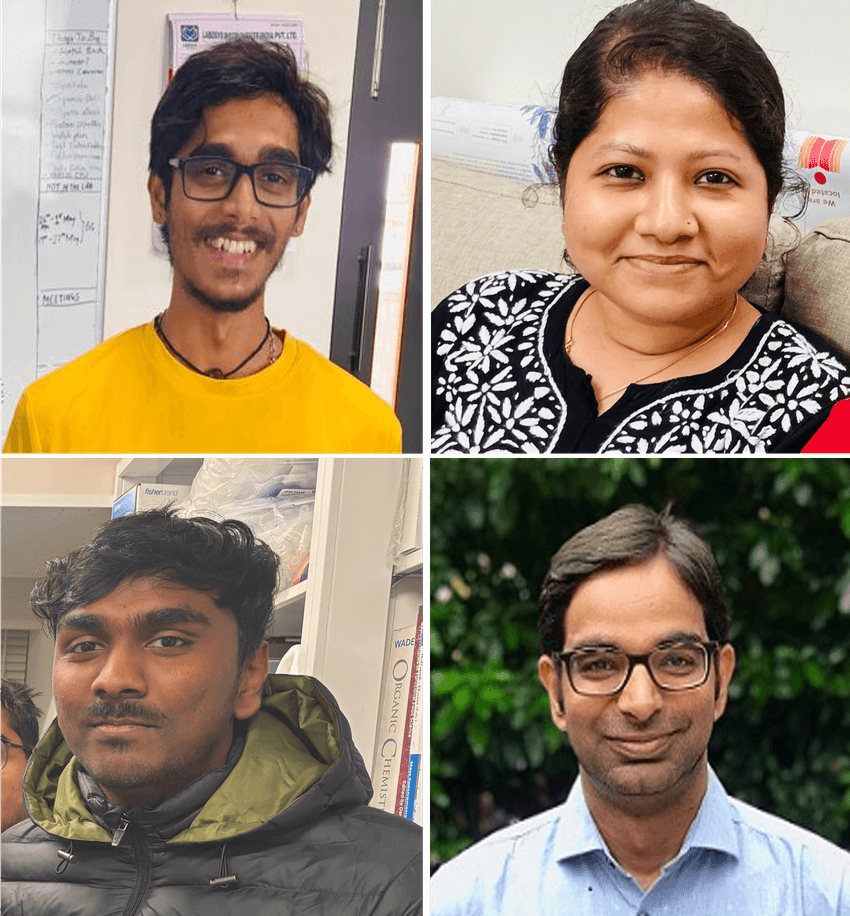

Gaurav Sharma (bottom right) and his team members Vishvesh Karthik, Utkarsha Mahanta, and Indupalli Sishir Anand (clockwise) developed GScholarLens, a tool that calculates an adjusted h-index based on authorship order.

Gaurav Sharma

“A corresponding author is bringing the ideology behind the project, bringing the funding behind the project in most of the cases,” said Sharma. Corresponding authors also take responsibility for the research and serve as the points of contact for communication about the work. Because the first author usually does most of the work, the team gave first authors a nearly, but not exactly, equal weighting.

Second authors receive a 50 percent weighting. Each remaining coauthor receives 25 percent if a paper has six or fewer authors; on papers with seven or more authors, each middle coauthor receives 10 percent.
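The weighting scheme described above can be sketched as a small Python function. This is a hypothetical illustration, not GScholarLens's actual code: the name `authorship_weight` is invented here, and the article does not say how the tool identifies the corresponding author from a Google Scholar listing, so the sketch simply takes that as an input flag and assumes it overrides author position.

```python
def authorship_weight(position: int, n_authors: int, is_corresponding: bool = False) -> float:
    """Return the fraction of a paper's citations credited to one author.

    Hypothetical sketch of the weights reported for GScholarLens;
    `position` is 1-based (1 = first author).
    """
    if not 1 <= position <= n_authors:
        raise ValueError("position must be between 1 and n_authors")
    if is_corresponding:
        return 1.0  # corresponding author: 100 percent (assumed to override position)
    if position == 1:
        return 0.9  # first author: 90 percent
    if position == 2:
        return 0.5  # second author: 50 percent
    # Remaining coauthors: 25 percent on papers with six or fewer authors,
    # 10 percent on papers with seven or more.
    return 0.25 if n_authors <= 6 else 0.10


if __name__ == "__main__":
    # A hypothetical eight-author paper whose last author is corresponding.
    for pos in range(1, 9):
        print(pos, authorship_weight(pos, 8, is_corresponding=(pos == 8)))
```

Treating the corresponding flag as an override means an author who is both first and corresponding gets full credit in this sketch; the preprint may handle that case differently.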

“These are arbitrary numbers,” said Sharma. “There is no scientific proof [with which] we can justify this. But again, you have to bring arbitrariness somewhere. Even h-index is arbitrary. Frankly citations are arbitrary. General impact factors are arbitrary.”

According to Sharma, the Sh-index gives a more complete picture of a researcher’s productivity and impact. For instance, some researchers largely participate in collaborative projects and contribute relatively little, but they still accumulate authorship and citations, which add to their h-index. The Sh-index offers clearer insights into whether they have led projects, he explained.
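One way to see the effect Sharma describes is to apply authorship weights to citation counts before computing the h-index. The Python sketch below assumes, for illustration only, that a paper's weighted citation count is its citations multiplied by the researcher's authorship weight and that the Sh-index is the h-index of those weighted counts; the preprint may define the normalization differently (for example, with rounding). The function name `sh_index` and all citation data are hypothetical.

```python
def sh_index(papers: list[tuple[int, float]]) -> int:
    """Authorship-weighted h-index (illustrative sketch, not the preprint's code).

    Each paper is a (citation_count, weight) pair, where weight is the
    researcher's authorship weight on that paper, between 0.0 and 1.0.
    Assumption: weighted citations = citations * weight, with no rounding.
    """
    weighted = sorted((citations * weight for citations, weight in papers), reverse=True)
    h = 0
    for rank, value in enumerate(weighted, start=1):
        if value >= rank:
            h = rank  # at least `rank` papers have >= `rank` weighted citations
        else:
            break
    return h


if __name__ == "__main__":
    # Hypothetical researcher: five papers with 10 citations each.
    led = [(10, 1.0), (10, 0.9)]       # corresponding author, first author
    consortium = [(10, 0.10)] * 3      # middle author on large (7+ author) papers
    print(sh_index([(10, 1.0)] * 5))   # every paper fully credited: prints 5
    print(sh_index(led + consortium))  # weighted by role: prints 2
```

In this made-up example, the researcher's unweighted h-index is 5 regardless of role, but because they led only two of the five papers, the weighted version drops to 2.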

GScholarLens Received Mixed Reviews

“I believe [this metric] is much more elegant and much more nuanced than previous versions of it,” said Marios Georgakis, a cardiovascular geneticist at Ludwig Maximilian University of Munich who was not associated with the preprint.

Mike Thelwall, a data scientist at the University of Sheffield, who was not part of the team, agreed. “Weightages given to the authors [are] a commonsense change, which is good,” he said. “It’s a step forward. It’s definitely a step forward.”

Marios Georgakis is a cardiovascular geneticist at Ludwig Maximilian University of Munich. While he appreciated the nuance behind GScholarLens, he questioned whether the academic community needs another metric to quantify research output.

Marios Georgakis

However, Jodi Schneider, an information scientist at the University of Wisconsin-Madison, who was also not part of the team, disagreed. “The effort that different authors put in is not commensurate with the impact of their contributions. It might be low effort, but it [could have a] high impact,” she said. For instance, a middle coauthor may have generated a dataset on which the rest of the paper depends entirely, even if the first author undertook most of the analysis, she explained.

Both Schneider and Thelwall noted that the tool would work well only in select fields, because in some fields, such as mathematics and economics, authors are conventionally listed alphabetically rather than by contribution. Sharma agreed: “The tool which we have created is only focused on biological sciences and chemistry…fields where authorship has a weightage.”

Despite their opposing views about the logic behind it, Schneider and Thelwall agreed that the tool is well-developed. “I particularly like the fact that it makes it easy to access through a browser extension. That's a good idea,” said Thelwall. “They've thought through how to make a very simple system once you install it,” said Schneider.

According to Sharma, the team wanted the tool to be easy to use and to offer transparency about anyone with a Google Scholar profile. “Any person, scholar, PhD student, or intern should be able to run this tool on any profile and see how many papers [one has] published as a first or lead author,” he said.

Can the H-Index Be Improved?

While Thelwall noted that the adjustments Sharma and his team have made to the h-index seem reasonable, he added, “The problem I have with it first [is] the h-index is a bad index.”

Jodi Schneider is an information scientist at the University of Wisconsin-Madison who works at the intersection of scientific data and how it can be used, managed, and understood.

© School of Information Sciences, University of Illinois Urbana-Champaign/ Thompson-McClellan Photography

Schneider agreed. “The h-index is [meaningless]. We don't need to fix the h-index. We can't fix the h-index.”

The h-index was first proposed in 2005 as a measure of scientific output in theoretical physics, and several other fields have since co-opted it to quantify success. Early on, researchers observed a link between a scholar's h-index and their likelihood of receiving awards such as the Nobel Prize, but this correlation has declined substantially in recent years.3,4

Scientists have also found that the h-index is biased and sexist: older men, who are less likely to have had career breaks, tend to have higher h-indices than younger and female researchers. Despite this, the h-index has become an important factor in hiring, promotion, and funding decisions.

“I'm not even a follower of [the] h-index,” admitted Sharma, adding that he would probably be the happiest person seeing such metrics get scrapped. “[But] I don’t see it getting removed,” he added.

Do Researchers Really Need One More Measure?

Mike Thelwall is a data scientist at the University of Sheffield, who researches citation analysis.

Mike Thelwall

Beyond the logic and logistics behind GScholarLens, researchers noted a bigger question: What is the point of science, and what do metrics like the h-index or Sh-index incentivize?

“What's really important is how do we design the scientific system so that it works for what society wants and for what society needs,” said Schneider. “Counting publications is never going to get us to a place that is better.”

Georgakis agreed, adding that the academic community is too focused on quantifying output based on metrics. “At the end of the day, the main output is how our discoveries and our work impact future research.” He added that an important measure of the impact of biomedical research would be to see whether the discoveries have reached the people who need them.

“Is it really worth investing so much energy and thought towards developing such tools, or should all this energy just be better dedicated towards just doing research and trying to evolve the field that everyone's working on?” he said.

Sharma agreed that academics focus too much on quantifying output. “Science cannot be calculated, but in the end, it is happening. All the grant agencies, all the institutions, everywhere they are calculating it,” he said. “We cannot run away from it.”

1. Hirsch JE. An index to quantify an individual's scientific research output. Proc Natl Acad Sci USA. 2005;102(46):16569-16572.

2. Karthik V, et al. Authorship-contribution normalized Sh-index and citations are better research output indicators. arXiv. 2025;2509.04124.

3. Bornmann L, Daniel HD. What do we know about the h index? J Am Soc Inf Sci Technol. 2007;58(9):1381-1385.

4. Koltun V, Hafner D. The h-index is no longer an effective correlate of scientific reputation. PLoS One. 2021;16(6):e0253397.