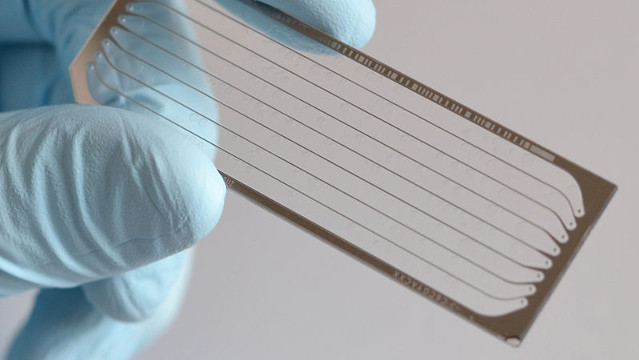

WIKIMEDIA, BAINSCOU

For clinical purposes, next-generation sequencing (NGS) has all but replaced its methodological predecessor, Sanger sequencing. It is faster. It is cheaper. But is next-gen sequencing alone sensitive and specific enough to catch every difficult-to-detect, disease-associated variant while avoiding false positives?

“There is significant debate within the diagnostics community regarding the necessity of confirming NGS variant calls by Sanger sequencing, considering that numerous laboratories report having 100% specificity from the NGS data alone,” Ambry Genetics Chief Executive Officer Aaron Elliott and colleagues wrote in a study published last week (October 6) in The Journal of Molecular Diagnostics.

Elliott and colleagues simulated a false-positive rate of zero when comparing the results of 20,000 hereditary cancer NGS panels—including 47 disease-NGS alone, the researchers “missed [the] detection of 176 Sanger-confirmed variants, the majority in complex genomic regions (n = 114) and mosaic mutations (n = 7),” they reported in their paper.

In an interview with The Scientist, Elliott lamented a lack of quality-control guidelines regarding confirmatory sequencing methods among ...