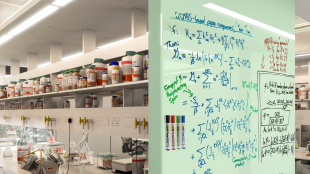

FLICKR, UNIVERSITY OF EXETER

Recent years have seen increasing numbers of retractions, higher rates of misconduct and fraud, and general problems of data irreproducibility, spurring the National Institutes of Health (NIH) and others to launch initiatives to improve the quality of research results. Yesterday (April 7), at this year’s American Association for Cancer Research (AACR) meeting, researchers gathered in San Diego, California, to discuss why these problems have come to a head and how to fix them.

“We really have to change our culture and that will not be easy,” said Lee Ellis from the University of Texas MD Anderson Cancer Center, referring to the immense pressure researchers often feel to produce splashy results and publish in high-impact journals. Ellis emphasized that it is particularly important in biomedical research to ensure that the data coming out of basic research studies—which motivate human testing—is accurate. “Before we start a clinical trial, we’d better be sure this has some potential to help our patients,” he said.

C. Glenn Begley, chief scientific officer of TetraLogic Pharmaceuticals and former vice president of hematology and oncology research at Amgen, discussed a project undertaken by Amgen researchers to reproduce the results of more than 50 published studies. The ...