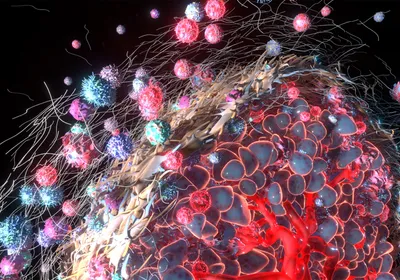

ABOVE: © MOLLY MENDOZA

It’s the question on every cancer patient’s mind: How long have I got? Genomicist Michael Snyder wishes he had answers.

For now, all physicians can do is lump patients with similar cancers into large groups and guess that they’ll have the same drug responses or prognoses as others in the group. But their methods of assigning people to these groups are coarse and imperfect, and often based on data collected by human eyeballs.

“When pathologists read images, only sixty percent of the time do they agree,” says Snyder, director of the Center for Genomics and Personalized Medicine at Stanford University. In 2013, he and then–graduate student Kun-Hsing Yu wondered if artificial intelligence could provide more-accurate predictions.

Yu fed histology images into a machine learning algorithm, along with pathologist-determined diagnoses, training it to distinguish lung cancer from normal tissue, and two different types of lung cancer from ...